WWDC25: Meet the Foundation Models Framework | Apple

Discover Apple’s Foundation Models framework, enabling powerful on-device large language models across macOS, iOS, iPadOS, and visionOS with privacy-first, efficient AI integration for developers.

Apple has introduced the Foundation Models framework, a powerful new tool that unlocks on-device large language model (LLM) capabilities across macOS, iOS, iPadOS, and visionOS. The framework provides a Swift API to the on-device model at the core of Apple Intelligence, enabling developers to build sophisticated language understanding and generation directly into their apps without compromising privacy or performance.

Based on Apple’s WWDC25 session, this overview examines the architecture and features of the Foundation Models framework and its core components: the on-device model itself, guided generation for structured Swift output, streaming APIs for responsive user experiences, tool calling that lets the model invoke code defined by the app, stateful sessions for multi-turn context, and developer tools that streamline integration and optimization. This article explores each of these facets in depth, illustrating how the framework can reshape app development with generative AI while preserving user privacy and maintaining efficient performance.

At the heart of the Foundation Models framework lies a large language model designed specifically to run efficiently on Apple devices. Unlike server-scale models, it is a device-scale LLM with three billion parameters, each quantized to two bits, and it is several orders of magnitude larger than any other model that ships as part of the operating system. It handles a wide variety of language tasks, such as content generation, summarization, classification, and extraction, all performed locally on the device.
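A back-of-the-envelope calculation shows why the 2-bit quantization matters. It assumes every parameter is stored at exactly 2 bits and ignores activations, runtime caches, and any layers that might be kept at higher precision, so it is a rough estimate of weight storage, not a figure Apple has published. The 16-bit line is included only for comparison.

$$
\begin{aligned}
3 \times 10^{9}\ \text{parameters} \times 2\ \text{bits} &= 6 \times 10^{9}\ \text{bits} = \tfrac{6 \times 10^{9}}{8}\ \text{bytes} = 7.5 \times 10^{8}\ \text{bytes} \approx 750\ \text{MB} \\
3 \times 10^{9}\ \text{parameters} \times 16\ \text{bits} &= 4.8 \times 10^{10}\ \text{bits} = 6 \times 10^{9}\ \text{bytes} \approx 6\ \text{GB}
\end{aligned}
$$

Under these assumptions, 2-bit weights need about one eighth of the space that 16-bit weights would.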

However, it is important to understand what the model is optimized for. While it excels at many natural language processing tasks, it is not designed for advanced reasoning or as a source of extensive world knowledge, which are the strengths of large server-based LLMs. Instead, developers are encouraged to break complex tasks into smaller, well-defined subtasks that play to the model’s strengths. This approach produces more reliable and efficient results within the constraints of device-scale computation.

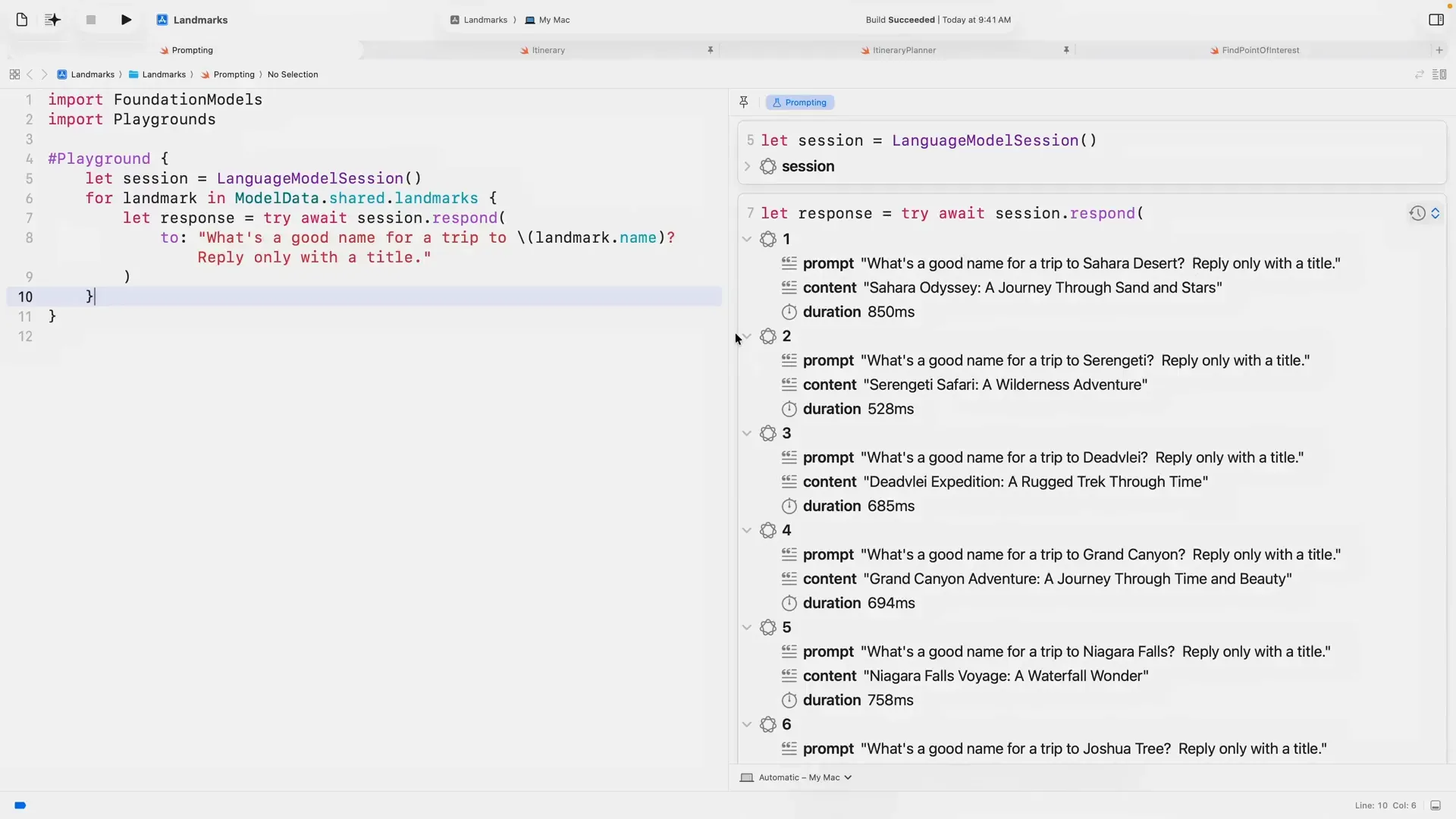

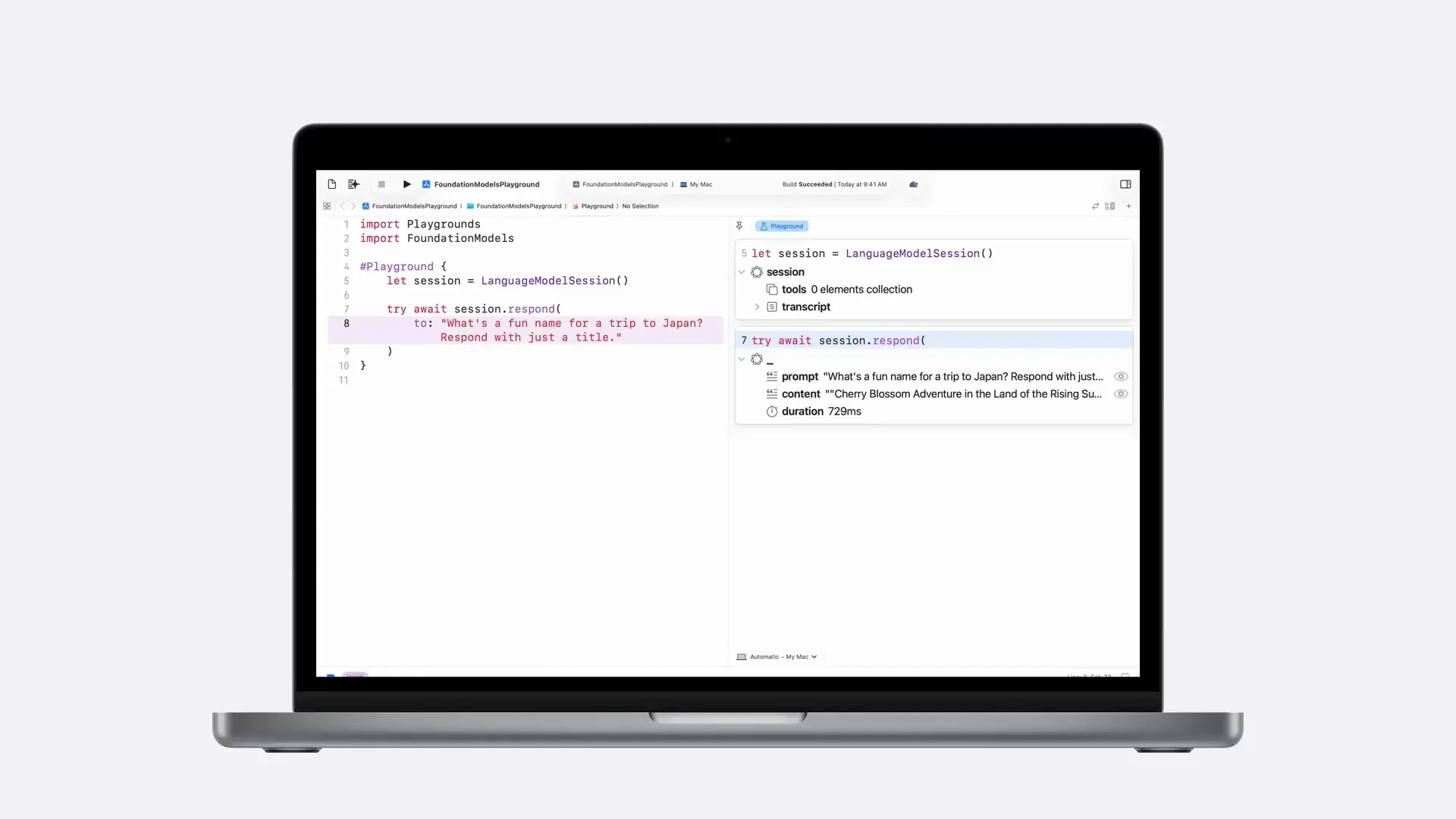

Developers can experiment with the model interactively using the new `#Playground` macro in Xcode. Prompts run with immediate feedback in the canvas, so developers can iterate quickly and refine the wording of their inputs to get the best responses. For example, a loop can generate trip titles for a list of landmarks and display each result in the canvas, showcasing the model’s flexibility and responsiveness.
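A minimal sketch of that loop, assuming the `#Playground` macro (from the `Playgrounds` module) and the `LanguageModelSession.respond(to:)` call as presented in the WWDC25 session. The `SampleData` enum and its landmark names are hypothetical stand-ins for an app's own data, and the snippet only runs inside Xcode 26 on a Mac with Apple Intelligence enabled.

```swift
import FoundationModels
import Playgrounds

// Hypothetical sample data standing in for the app's landmark model.
enum SampleData {
    static let landmarkNames = ["Mount Fuji", "Grand Canyon", "Machu Picchu"]
}

#Playground {
    for name in SampleData.landmarkNames {
        // A fresh session per landmark keeps the requests independent:
        // earlier titles are not carried over in the transcript.
        let session = LanguageModelSession()
        let response = try await session.respond(
            to: "What's a good name for a trip to \(name)? Respond only with a title"
        )
        // The generated title can be inspected in Xcode's canvas for each iteration.
        let title = response.content
    }
}
```

Creating one session per landmark is a choice made for this sketch; reusing a single session would let each prompt see the previous titles.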

To improve quality in specialized domains, Apple also provides adapters: specialized extensions trained to maximize the model’s capabilities for specific use cases, such as content tagging. These adapters improve accuracy and relevance for targeted tasks, and Apple plans to keep improving its models over time based on developer feedback.

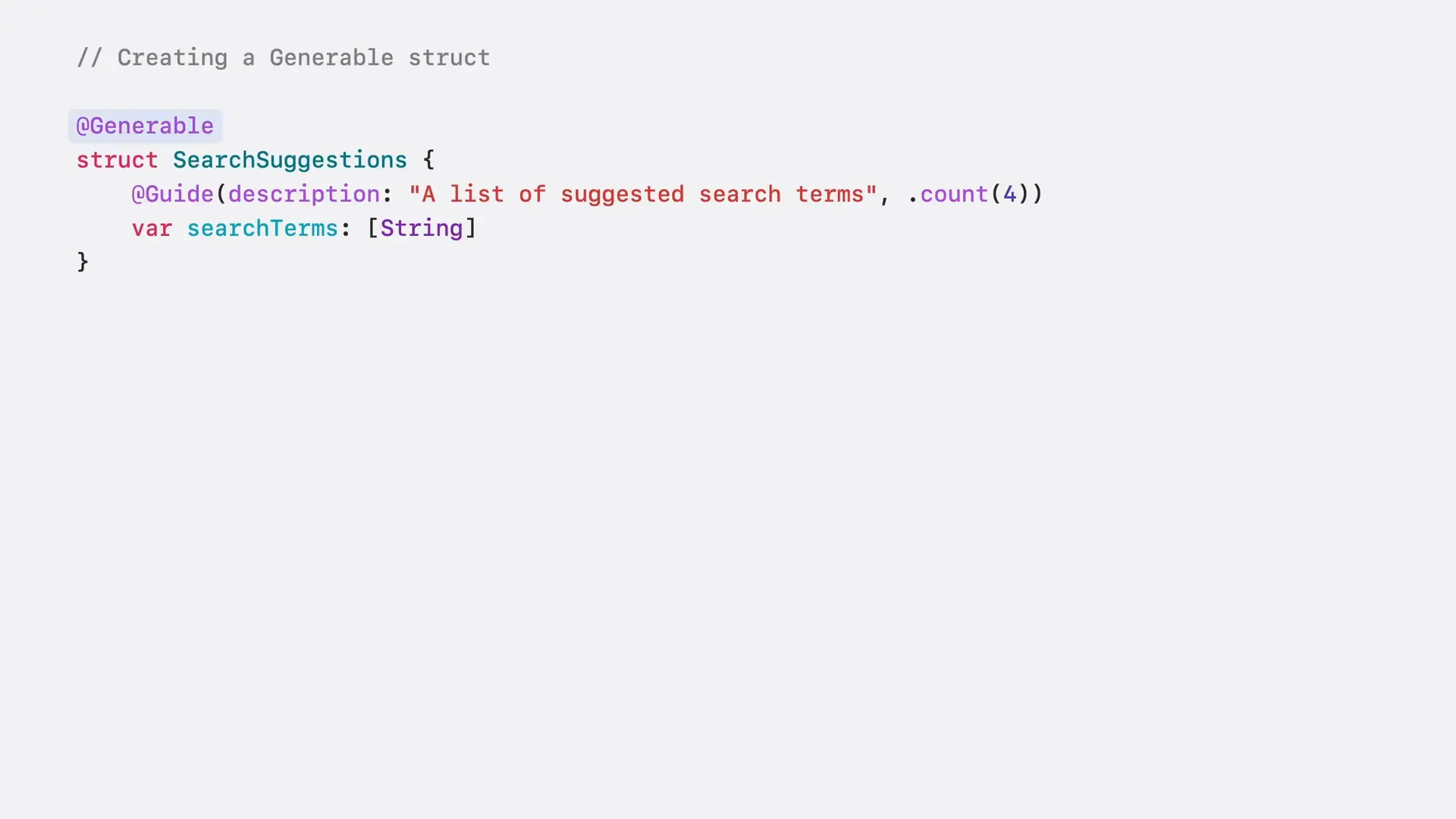

One of the standout features of the Foundation Models framework is guided generation, which fundamentally transforms how developers interact with LLM output. Traditionally, language models produce unstructured text that is easy for humans to read but difficult to parse programmatically. Developers often resort to instructing the model to output JSON or CSV, but this approach is brittle and error-prone due to the probabilistic nature of language models.

Guided generation solves this by connecting Swift’s type system directly to the model’s output through two new macros, `@Generable` and `@Guide`. The `@Generable` macro lets developers define Swift types that the model will instantiate, while `@Guide` attaches natural language descriptions and constraints to property values. Together they guarantee structurally correct output by means of a technique called constrained decoding.

For example, instead of prompting the model with complex instructions to produce a JSON object, developers can define a Swift struct representing the desired data schema. The model then generates an instance of this struct directly, which can be safely and easily mapped to UI components. This eliminates guesswork and the need for fragile string parsing hacks, streamlining development and improving reliability.
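The session's own search-suggestions example shows the pattern. This sketch assumes the `@Generable`/`@Guide` macros, the `.count(_:)` guide, and `respond(to:generating:)` as presented at WWDC25; `SearchSuggestions` and `suggestSearchTerms()` are illustrative names, not part of the framework.

```swift
import FoundationModels

// The schema the model must produce. @Guide adds a natural-language hint
// and a constraint (exactly four terms) on the generated array.
@Generable
struct SearchSuggestions {
    @Guide(description: "A list of suggested search terms", .count(4))
    var searchTerms: [String]
}

func suggestSearchTerms() async throws -> [String] {
    let session = LanguageModelSession()
    let response = try await session.respond(
        to: "Generate a list of suggested search terms for an app about visiting famous landmarks.",
        generating: SearchSuggestions.self
    )
    // response.content is a SearchSuggestions value: no JSON or string parsing.
    return response.content.searchTerms
}
```

The prompt only says *what* to generate; the output format is carried entirely by the Swift type.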

Guided generation supports primitive types such as strings, integers, floats, booleans, and decimals. It also handles arrays and nested composite types, including recursive structures, which opens up advanced use cases like generating dynamic user interfaces. The framework’s deep integration with Swift ensures that prompts can focus purely on what content to generate, rather than how it should be formatted.
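A hedged sketch of nested composite types: `Itinerary` contains an array of `DayPlan` values, which in turn contain `Activity` values mixing `String`, `Int`, and `Bool` properties. All type names and the `planTrip(to:)` helper are hypothetical; the point is that a single `respond(to:generating:)` call returns the whole tree already typed.

```swift
import FoundationModels

// Nested composite types: every type in the tree must itself be @Generable.
@Generable
struct Activity {
    var name: String
    @Guide(description: "Estimated cost in US dollars")
    var estimatedCost: Int
    var isOutdoors: Bool
}

@Generable
struct DayPlan {
    var title: String
    @Guide(description: "Activities for the day", .count(3))
    var activities: [Activity]        // array of a nested generable type
}

@Generable
struct Itinerary {
    var title: String
    var days: [DayPlan]
}

// One call returns the whole typed structure.
func planTrip(to landmark: String) async throws -> Itinerary {
    let session = LanguageModelSession()
    return try await session.respond(
        to: "Plan a two-day trip to \(landmark).",
        generating: Itinerary.self
    ).content
}
```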

This approach also improves model accuracy and speeds up inference, thanks to optimizations made possible by the close integration of Apple’s operating systems, developer tools, and model training. Developers interested in more advanced uses, such as dynamic schemas defined at runtime, can explore Apple’s companion session, “Deep dive into the Foundation Models framework.”

Latency is a critical factor when working with LLMs, especially on-device models that run under tight resource constraints. The Foundation Models framework addresses this challenge with a streaming API that delivers structured output as it is generated.

Unlike traditional delta streaming—which delivers incremental raw text chunks (tokens) requiring developers to accumulate and parse them—this framework streams incremental snapshots of partially generated structured responses. Each snapshot reflects a partial state of the full response, with optional properties that fill in progressively as generation proceeds.
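To make the contrast concrete, here is a toy model in plain Swift that uses none of the framework's types. `PartialItinerary` is a hypothetical stand-in for a macro-generated `PartiallyGenerated` type, and the string fragments are made up. With deltas, the client ends up holding raw text it still has to parse; with snapshots, the newest element is already a typed value.

```swift
// Toy model only: plain Swift, no FoundationModels types.

// Delta streaming: each element is a raw text fragment. The client must
// concatenate the fragments and still parse the result itself.
func accumulate(_ deltas: [String]) -> String {
    deltas.joined()
}

// Snapshot streaming: each element is a complete-so-far value whose
// properties stay nil until the model has produced them.
struct PartialItinerary: Equatable {
    var name: String?
    var days: [String]?
}

// Nothing to merge: the newest snapshot supersedes every earlier one.
func latest(_ snapshots: [PartialItinerary]) -> PartialItinerary? {
    snapshots.last
}

let deltas = ["{\"name\": \"Fuji ", "Getaway\", \"da", "ys\": [\"Hike\"]}"]
let rawText = accumulate(deltas)          // still a JSON string to decode

let snapshots = [
    PartialItinerary(name: "Fuji", days: nil),
    PartialItinerary(name: "Fuji Getaway", days: nil),
    PartialItinerary(name: "Fuji Getaway", days: ["Hike"]),
]
let current = latest(snapshots)           // ready to bind to UI as-is
print(rawText)
print(current as Any)
```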

This snapshot streaming approach harmonizes perfectly with SwiftUI and other declarative UI frameworks. Developers can bind UI state directly to these snapshots, enabling live updates that animate and transition smoothly as data arrives. This transforms potential waiting times into engaging moments, enhancing user experience.

To implement streaming, developers declare a state variable holding the `PartiallyGenerated` type (a mirror of the full output type, generated automatically by `@Generable`, in which every property is optional). They then iterate asynchronously over the response stream, updating the UI in real time as the model produces more content.
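A sketch of the SwiftUI side, modeled on the itinerary example from the WWDC25 session. It assumes `streamResponse(to:generating:)` and the macro-generated `Itinerary.PartiallyGenerated` type; the view, its properties, and the prompt are illustrative. In the session, the stream yields partially generated values directly; the element type may differ in later SDK releases, so check the current documentation before relying on it.

```swift
import FoundationModels
import SwiftUI

@Generable
struct Itinerary {
    var name: String
    @Guide(description: "A one-line plan for each day")
    var days: [String]
}

struct ItineraryView: View {
    let session: LanguageModelSession
    let landmarkName: String

    // Mirror type generated by @Generable; every property is optional.
    @State private var itinerary: Itinerary.PartiallyGenerated? = nil

    var body: some View {
        VStack(alignment: .leading) {
            // Each property appears as soon as the model has produced it.
            if let name = itinerary?.name {
                Text(name).font(.title)
            }
            if let days = itinerary?.days {
                // Index-based identity: a day's text keeps changing while it
                // streams, so using the text itself as the ID would churn views.
                ForEach(days.indices, id: \.self) { index in
                    Text(days[index])
                }
            }
            Button("Plan trip") {
                Task {
                    do {
                        let stream = session.streamResponse(
                            to: "Generate a 3-day itinerary to \(landmarkName).",
                            generating: Itinerary.self
                        )
                        // Each element is a newer snapshot that replaces the old one
                        // (as presented at WWDC25; later SDKs may wrap it differently).
                        for try await partial in stream {
                            itinerary = partial
                        }
                    } catch {
                        print(error)
                    }
                }
            }
        }
    }
}
```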

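Best practices for streaming include:

- Use SwiftUI animations and transitions creatively to hide latency while content arrives.
- Think carefully about view identity in SwiftUI, especially when generating arrays.
- Remember that properties are generated in the order they are declared in the struct, which affects both animations and output quality. For example, placing summary fields last in a struct often yields better summarization results.

Apple’s documentation and advanced tutorials provide further guidance on integrating streaming effectively.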
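A small illustration of the ordering tip: a hypothetical `@Generable` type that declares its summary after the facts it summarizes, so the facts are generated first. The type name, guide descriptions, `.count(3)` constraint, and `report(on:)` helper are chosen for this example only.

```swift
import FoundationModels

// Properties are generated in declaration order, so `facts` is produced
// before `summary`, and the summary can draw on facts already generated.
@Generable
struct LandmarkReport {
    @Guide(description: "Three notable facts about the landmark", .count(3))
    var facts: [String]

    // Declared last on purpose: summaries often come out better at the end.
    @Guide(description: "A one-sentence summary of the facts above")
    var summary: String
}

func report(on landmark: String) async throws -> LandmarkReport {
    try await LanguageModelSession().respond(
        to: "Describe \(landmark).",
        generating: LandmarkReport.self
    ).content
}
```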

Tool calling extends the Foundation Models framework beyond passive text generation. It lets the model decide to invoke code defined within the app, enabling dynamic, context-aware responses that can incorporate real-time data and perform actions.

This capability is transformative because it lets the AI decide when and how to use tools based on the task at hand, a decision that would be challenging to encode manually. For instance, a travel app can expose MapKit-powered tools to fetch restaurant or hotel information dynamically. The model can then invoke these tools, receive structured outputs, and cite authoritative sources, reducing hallucinations and improving factual accuracy.

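The tool calling flow involves several steps:

1. When a session is created with tools, the tool definitions are added to the session’s transcript alongside any instructions.
2. The user’s prompt is passed to the model, which can decide that a tool would help answer it.
3. The model generates a tool call containing the arguments it wants to pass.
4. The framework automatically invokes the tool’s code and inserts the output into the transcript.
5. The model uses the tool output, together with the rest of the transcript, to generate the final response.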

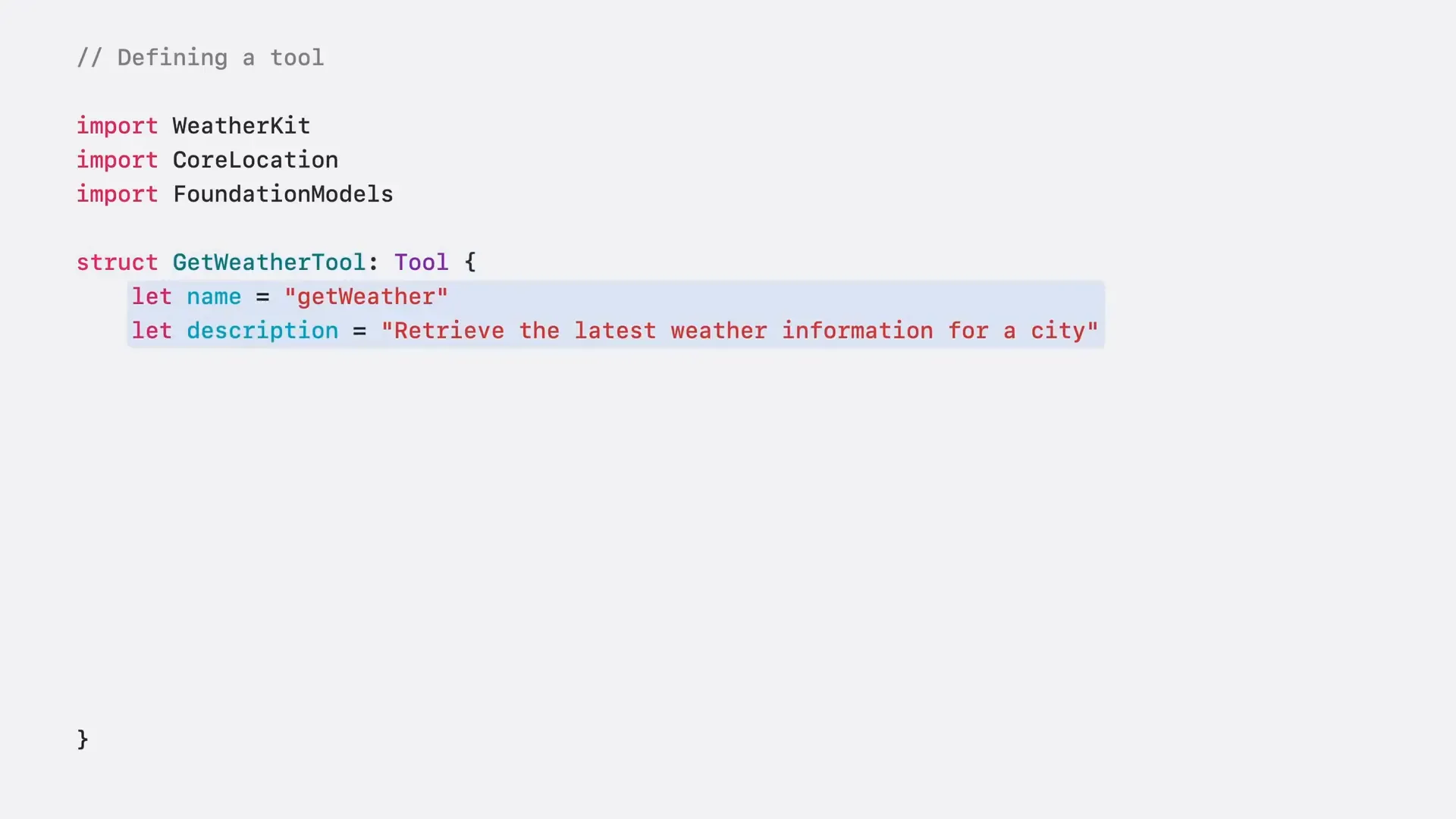
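The flow can be modeled in a few lines of plain Swift. This is a toy that uses none of the framework's types: `TranscriptEntry`, `ToyTool`, and the scripted `toyModelStep` (which always requests the weather tool once, then answers from its output) are hypothetical stand-ins for the real model and session, and the `71°F` value is a stub. It shows only how tool calls and tool outputs are interleaved in a transcript before the final response.

```swift
// Toy model of the tool-calling flow; none of these types belong to FoundationModels.

enum TranscriptEntry: Equatable {
    case prompt(String)
    case toolCall(name: String, argument: String)
    case toolOutput(name: String, value: String)
    case response(String)
}

// Hypothetical tool: a name the "model" can refer to, plus the code to run.
struct ToyTool {
    let name: String
    let run: (String) -> String
}

// Scripted stand-in for the model: request the weather tool once,
// then answer using the most recent tool output in the transcript.
func toyModelStep(_ transcript: [TranscriptEntry]) -> TranscriptEntry {
    for entry in transcript.reversed() {
        if case let .toolOutput(_, value) = entry {
            return .response("It's \(value) in Cupertino.")
        }
    }
    return .toolCall(name: "getWeather", argument: "Cupertino")
}

// Stand-in for the session: append each model step to the transcript,
// run any requested tool, and stop once the model produces a response.
func runToySession(prompt: String, tools: [ToyTool], maxSteps: Int = 8) -> [TranscriptEntry] {
    var transcript: [TranscriptEntry] = [.prompt(prompt)]
    for _ in 0..<maxSteps {
        let step = toyModelStep(transcript)
        transcript.append(step)
        // Anything other than a call to a known tool ends the turn.
        guard case let .toolCall(name, argument) = step,
              let tool = tools.first(where: { $0.name == name }) else { break }
        // The "framework" runs the tool and inserts its output into the transcript.
        transcript.append(.toolOutput(name: name, value: tool.run(argument)))
    }
    return transcript
}

let weatherTool = ToyTool(name: "getWeather") { _ in "71°F" }  // stubbed value
let toyTranscript = runToySession(prompt: "What is the temperature in Cupertino?", tools: [weatherTool])
for entry in toyTranscript { print(entry) }
```

The `maxSteps` cap is a design choice of this sketch, guarding against a model that keeps requesting tools indefinitely.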

Defining tools is straightforward. Developers create types conforming to the `Tool` protocol, specifying a name, a description, and a `call` method that takes typed arguments. The body of `call` can use any native API, such as Core Location or WeatherKit, to gather and compute data. Results are returned as `ToolOutput` values, which can carry structured data or plain text.

Tools must be registered with a session at initialization, after which the model can invoke them transparently during interaction. This setup supports both compile-time type safety and dynamic runtime schemas for more flexible scenarios, catering to a wide range of application needs.
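A sketch of defining and registering a tool, adapted from the weather example in the WWDC25 session. The `Tool` conformance, the `@Generable` `Arguments` type, `ToolOutput`, `GeneratedContent(properties:)`, and `LanguageModelSession(tools:instructions:)` follow the session as presented; parts of this API may have changed in later SDK releases, so verify the names against the current documentation. WeatherKit also requires the WeatherKit capability to be enabled for the app, and `askAboutWeather()` is a hypothetical helper.

```swift
import CoreLocation
import FoundationModels
import WeatherKit

struct GetWeatherTool: Tool {
    // The name and description tell the model what the tool does.
    let name = "getWeather"
    let description = "Retrieve the latest weather information for a city"

    // Arguments are @Generable, so the model fills them in via guided generation.
    @Generable
    struct Arguments {
        @Guide(description: "The city to fetch the weather for")
        var city: String
    }

    func call(arguments: Arguments) async throws -> ToolOutput {
        // Any native API can run here: geocode the city, then query WeatherKit.
        let places = try await CLGeocoder().geocodeAddressString(arguments.city)
        guard let location = places.first?.location else {
            // Plain-text output is also allowed.
            return ToolOutput("No location found for \(arguments.city).")
        }
        let weather = try await WeatherService.shared.weather(for: location)
        let temperature = weather.currentWeather.temperature.value

        // Structured output that is inserted into the session's transcript.
        return ToolOutput(GeneratedContent(properties: ["temperature": temperature]))
    }
}

// Tools are attached when the session is created; the model decides when to call them.
func askAboutWeather() async throws -> String {
    let session = LanguageModelSession(
        tools: [GetWeatherTool()],
        instructions: "Help the user with weather forecasts."
    )
    let response = try await session.respond(to: "What is the temperature in Cupertino?")
    return response.content
}
```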

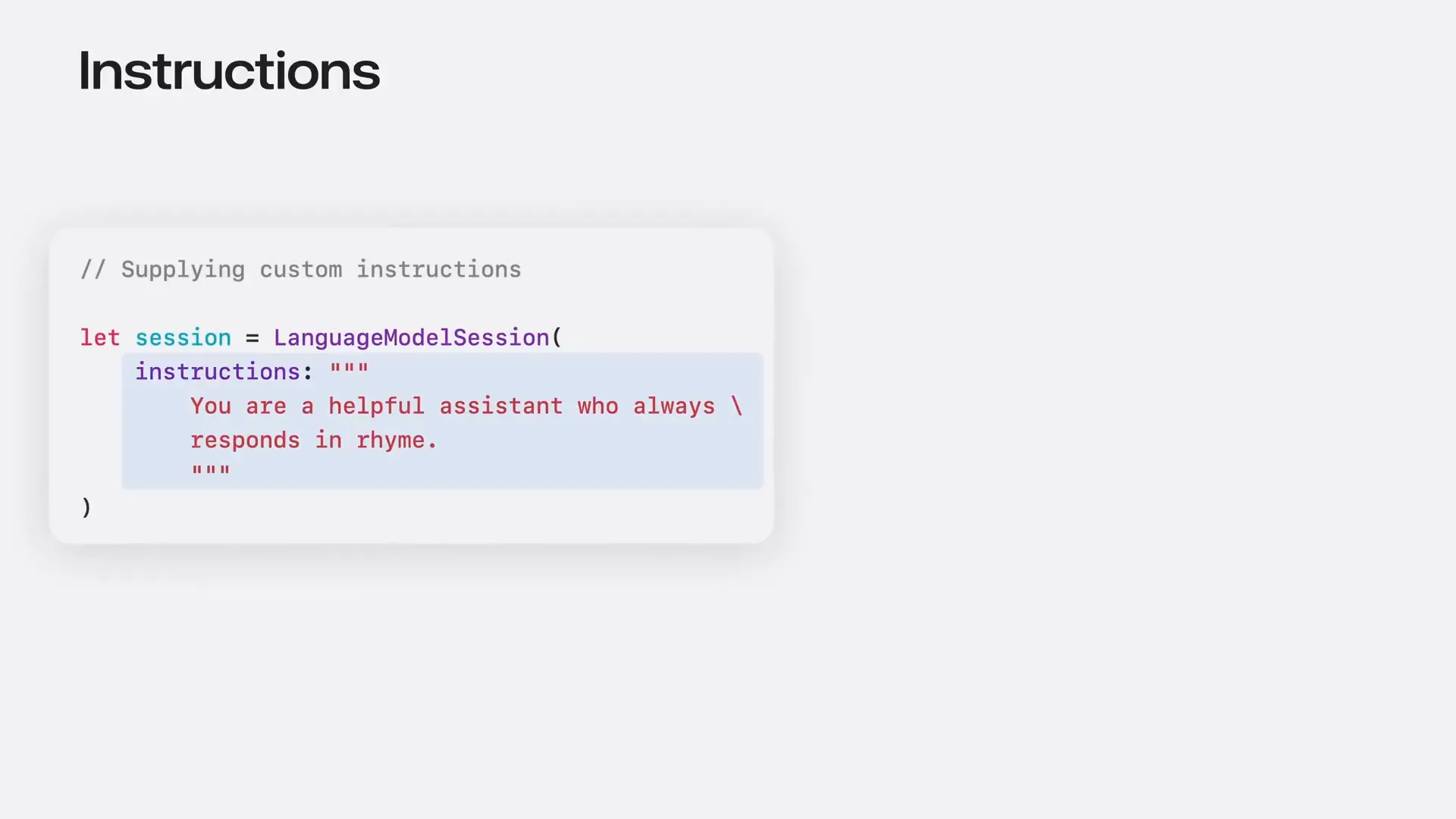

The Foundation Models framework is built around the concept of stateful sessions, which maintain context across multiple exchanges with the model. This is essential for creating coherent, conversational experiences where the model remembers previous interactions and responds appropriately.

When a session is created, it uses the general-purpose on-device model by default and can take optional custom instructions. Instructions, written by the developer, define the model’s role and response style, such as tone or verbosity; prompts typically come from users. The model is trained to prioritize instructions over prompts, which helps mitigate prompt injection, so untrusted user input belongs in prompts rather than in instructions.
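A short sketch of that separation, assuming `LanguageModelSession(instructions:)` as shown at WWDC25. `makeRhymingSession()` and `reply(to:in:)` are hypothetical helpers; the rhyming instruction is the session's own example.

```swift
import FoundationModels

// Instructions are written by the developer and set the model's role and style.
func makeRhymingSession() -> LanguageModelSession {
    LanguageModelSession(
        instructions: "You are a helpful assistant who always responds in rhyme."
    )
}

// User text goes into the prompt; it is never interpolated into the instructions.
func reply(to userInput: String, in session: LanguageModelSession) async throws -> String {
    try await session.respond(to: userInput).content
}
```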

Each interaction is appended to the session’s transcript, which the model references to maintain awareness of conversation history. For example, if the user says “do another one” after generating a haiku, the model understands the context without needing explicit repetition.
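The haiku exchange from the session, wrapped in a hypothetical `haikuConversation()` function. It assumes `respond(to:)` and the session's `transcript` property as presented at WWDC25; because both prompts go through the same `LanguageModelSession`, the second one can refer back to the first.

```swift
import FoundationModels

func haikuConversation() async throws {
    let session = LanguageModelSession()

    let first = try await session.respond(to: "Write a haiku about fishing")
    print(first.content)

    // No need to restate the task: the transcript already holds the
    // first prompt and response, so "another one" is unambiguous.
    let second = try await session.respond(to: "Do another one about golf")
    print(second.content)

    // Inspect the accumulated history of the conversation.
    print(session.transcript)
}
```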

During response generation, the session’s `isResponding` property indicates whether the model is still producing output, which helps developers manage UI state and prevent overlapping prompts.
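A minimal SwiftUI sketch, modeled on the session's example, that gates a button on `isResponding`. `HaikuView` and its prompt are illustrative, and the sketch assumes SwiftUI observes changes to `isResponding`, as in the session's example, so the button re-enables when generation finishes.

```swift
import FoundationModels
import SwiftUI

struct HaikuView: View {
    // One session per view, so follow-up prompts share a transcript.
    @State private var session = LanguageModelSession()
    @State private var haiku: String? = nil

    var body: some View {
        VStack {
            if let haiku {
                Text(haiku)
            }
            Button("Go!") {
                Task {
                    do {
                        haiku = try await session.respond(
                            to: "Write a haiku about something you haven't yet"
                        ).content
                    } catch {
                        print(error)
                    }
                }
            }
            // Disabled while a response is being generated, so a second
            // prompt cannot start on this session before the first finishes.
            .disabled(session.isResponding)
        }
    }
}
```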

Apple also offers specialized adapters for built-in use cases, such as content tagging, which supports tag generation, entity extraction, and topic detection with first-class guided generation integration. These adapters can be customized with instructions and output types to detect nuanced elements like actions and emotions.
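A sketch of customizing the content-tagging adapter, following the actions-and-emotions example from the WWDC25 session. It assumes `SystemLanguageModel(useCase: .contentTagging)` and the `.maximumCount(_:)` guide as presented there; `ActionEmotionTags` and `tagActionsAndEmotions(in:)` are illustrative names.

```swift
import FoundationModels

// Output type for the adapter; each list is capped at three items.
@Generable
struct ActionEmotionTags {
    @Guide(.maximumCount(3))
    var actions: [String]
    @Guide(.maximumCount(3))
    var emotions: [String]
}

func tagActionsAndEmotions(in text: String) async throws -> ActionEmotionTags {
    // Select the specialized content-tagging adapter instead of the general model,
    // and use instructions to steer what it tags.
    let session = LanguageModelSession(
        model: SystemLanguageModel(useCase: .contentTagging),
        instructions: "Tag the 3 most important actions and emotions in the given input text."
    )
    return try await session.respond(to: text, generating: ActionEmotionTags.self).content
}
```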

Before creating sessions, it is advisable to check model availability since the on-device models run only on Apple Intelligence-enabled devices in supported regions. The framework provides detailed error handling for scenarios like guardrail violations, unsupported languages, and context window overflows to ensure robust user experiences.
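A sketch of both checks, assuming `SystemLanguageModel.default.availability` and the `LanguageModelSession.GenerationError` cases for guardrail violations, unsupported languages, and context-window overflow as presented at WWDC25. The helper name and the user-facing messages are hypothetical; a real app would more likely hide the feature than return an error string.

```swift
import FoundationModels

// Hypothetical helper that degrades gracefully instead of throwing.
func generateIfAvailable(_ prompt: String) async -> String {
    // The model exists only on Apple Intelligence-enabled devices in supported regions.
    if case .unavailable(let reason) = SystemLanguageModel.default.availability {
        return "Model unavailable: \(reason)"
    }

    let session = LanguageModelSession()
    do {
        return try await session.respond(to: prompt).content
    } catch let error as LanguageModelSession.GenerationError {
        switch error {
        case .guardrailViolation:
            return "The request was flagged by the safety guardrails."
        case .unsupportedLanguageOrLocale:
            return "This language isn't supported."
        case .exceededContextWindowSize:
            // The transcript is full; a new session starts with an empty one.
            return "The conversation is too long. Please start over."
        default:
            return "Generation failed: \(error.localizedDescription)"
        }
    } catch {
        return "Unexpected error: \(error)"
    }
}
```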

Apple has designed the Foundation Models framework with developer productivity in mind. The Xcode playground macro allows developers to prompt the model directly within any Swift file, enabling rapid prototyping and iteration without rebuilding the entire app. This tight integration simplifies testing and refining prompts and data structures.

Latency profiling is central to optimizing LLM-powered apps. A new Instruments profiling template helps developers measure and analyze the latency of model requests, identify bottlenecks, and quantify performance improvements. Understanding where the time goes helps when tuning prompt verbosity and deciding when to prewarm the model for a smoother user experience.
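A hedged sketch of prewarming, assuming the session's `prewarm()` method. `TripPlannerModel` and `userOpenedPlanner()` are hypothetical; the idea is to create the session early and prewarm it when a request is likely, for example when the user opens the relevant screen, then confirm the benefit with the Instruments template rather than assuming it.

```swift
import FoundationModels

// Hypothetical controller that owns a long-lived session.
final class TripPlannerModel {
    let session = LanguageModelSession(
        instructions: "Suggest concise trip titles."
    )

    // Call when a request is likely soon; loading model resources ahead of
    // time aims to reduce the latency of the first respond(to:) call.
    func userOpenedPlanner() {
        session.prewarm()
    }
}
```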

Apple encourages developers to send feedback through Feedback Assistant and provides an encodable feedback attachment structure for submitting rich diagnostic data. For machine learning practitioners with specialized requirements, Apple also offers an adapter training toolkit for creating custom adapters tailored to their own datasets and use cases, with the caveat that these adapters must be retrained whenever Apple updates the base model.

The Foundation Models framework represents a significant leap forward for integrating generative AI directly on Apple devices, balancing powerful language capabilities with privacy, efficiency, and developer friendliness. By embedding a large, optimized language model locally, Apple empowers developers to create intelligent, context-aware features that run offline and keep user data secure.

Guided generation’s marriage of Swift’s type system with natural language prompts sets a new standard for reliable, structured AI output, eliminating common parsing pitfalls and accelerating development. Streaming snapshots redefine user interaction by turning AI latency into a dynamic opportunity for engagement through smooth, real-time UI updates.

Tool calling extends this paradigm further, allowing apps to expose native functionality to the model, enabling autonomous decision-making and integration with live data sources. Stateful sessions provide the conversational memory essential for coherent multi-turn dialogue, while specialized adapters enhance domain-specific accuracy.

Apple’s comprehensive developer tooling—from playground macros to latency profilers and feedback channels—rounds out the ecosystem, making it accessible to both app developers and machine learning practitioners. As the framework evolves with continuous improvements and community input, it promises to open new horizons in personalized, intelligent app experiences.

Ultimately, the Foundation Models framework invites developers to rethink how AI can be embedded at the core of apps, shifting from server-dependent to on-device intelligence that is private, performant, and deeply integrated. The future of app development on Apple platforms looks more intelligent and responsive than ever before.

Source: WWDC25: Meet the Foundation Models framework | Apple